The SuperKEKB particle accelerator in Tsukuba, Japan, was built to achieve the highest particle collision rates in the world, enabling next-generation research into fundamental physics. SuperKEKB is unique in employing a nano-beam scheme, which squeezes the beams to nanometre-scale sizes at the interaction point, combined with a large crossing angle between the colliding beams to enhance electron–positron collision efficiency.

In its quest to reach the world’s highest collision rates, SuperKEKB has repeatedly suffered from Sudden Beam Loss (SBL) events. An SBL event occurs when the beam current drops by ten percent or more within a few turns, roughly 20 to 30 microseconds, which causes the beam to be aborted. What specifically triggers an SBL event is still unknown. According to one theory, beam orbit oscillation causes the beam size to increase significantly a few turns before an SBL occurs. However, it was also observed that the size growth started earlier than the orbit oscillation. Beam sizes up to ten times larger than usual have been measured.
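
The SBL definition above can be expressed as a simple check on per-turn beam current readings. The sketch below is illustrative only: the function name `find_sbl_turn`, the three-turn window standing in for "a few turns", and the idea of a per-turn current array are assumptions, not SuperKEKB's actual abort logic.

```python
from typing import Optional, Sequence

# Assumption: "a few turns" is modelled as a 3-turn window (hypothetical value).
WINDOW_TURNS = 3
# From the SBL definition: a loss of ten percent or more of the beam current.
LOSS_THRESHOLD = 0.10


def find_sbl_turn(
    current_per_turn: Sequence[float],
    window_turns: int = WINDOW_TURNS,
    threshold: float = LOSS_THRESHOLD,
) -> Optional[int]:
    """Return the first turn whose current is at least `threshold` (as a
    fraction) below the highest current seen in the preceding `window_turns`
    turns, or None if no such drop is found. Hypothetical helper."""
    for turn in range(window_turns, len(current_per_turn)):
        reference = max(current_per_turn[turn - window_turns:turn])
        if reference <= 0:
            continue  # no stored beam, nothing to lose
        loss = (reference - current_per_turn[turn]) / reference
        if loss >= threshold:
            return turn
    return None


if __name__ == "__main__":
    # Synthetic example: a stable beam followed by a rapid loss.
    currents = [1.0] * 10 + [0.95, 0.88, 0.80]
    print(find_sbl_turn(currents))  # prints 11
```

Taking the maximum over the window, rather than a single earlier sample, flags a drop that happens anywhere inside the window, while a slow decay spread over many turns is not flagged.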

SBL is the biggest obstacle to the long-term stability of SuperKEKB beam operation. It can also seriously damage accelerator components in the electron and positron rings, which sit side by side in the same tunnel. Identifying the source of SBL incidents and putting suppressive measures in place was therefore crucial.

IDENTIFYING THE ORIGIN OF SBL

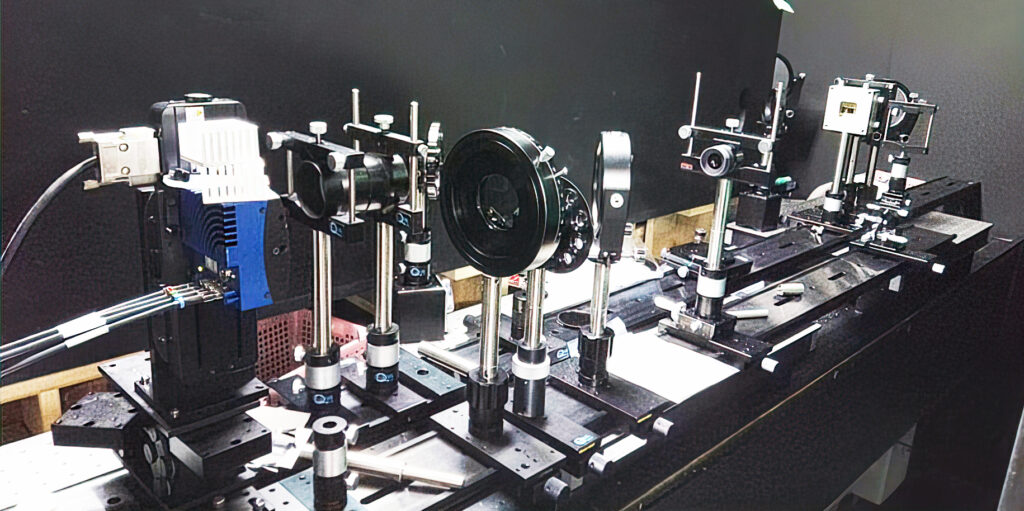

To help uncover the root cause of SBL and ensure redundancy, the SuperKEKB team developed two turn-by-turn beam size monitors operating at different wavelengths: an X-ray system for beam size diagnostics, and a visible light monitor focusing on beam orbit variation and size increases.

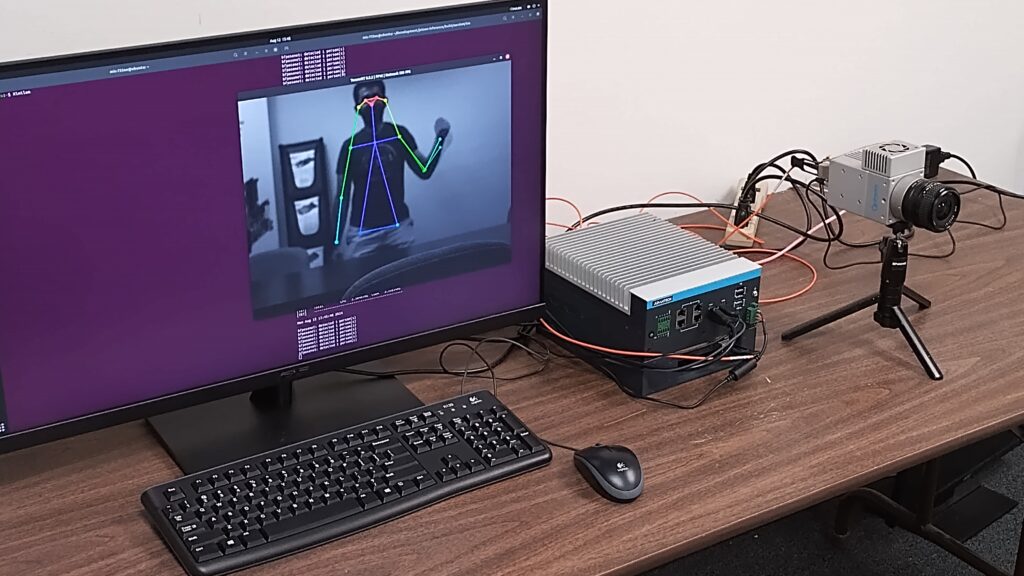

Because the accelerator’s revolution frequency is 99.4 kHz, the imaging components had to comply with the high-speed CoaXPress 2.0 (CXP-12) standard. In both the X-ray and visible light systems, data transfer rates of up to 50 gigabits per second were achieved by aggregating four links between a Mikrotron EoSens 1.1 CXP2 CMOS camera and a BitFlow Claxon CXP4 PCIe quad-link frame grabber. During data acquisition, the camera shutter was operated in precise synchronization with SuperKEKB’s 99.4 kHz revolution frequency. Captured image data was continuously stored in the frame grabber’s 2 GB ring buffer, and only when the beam was aborted was the buffered data transferred to the disk server for offline analysis.
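
The acquire-continuously, save-on-abort pattern described above is a classic ring buffer. The Python sketch below models it in software only; it does not use the BitFlow SDK, and the class name `AbortTriggeredRingBuffer`, the fixed frame size, and the decimal reading of "2 GB" are assumptions made for illustration.

```python
from collections import deque
from typing import List


class AbortTriggeredRingBuffer:
    """Keeps the most recent frames; the oldest frame is overwritten when full.
    On a beam abort the buffer is frozen and its contents handed off for
    transfer (hypothetical model, not the frame grabber's real API)."""

    def __init__(self, capacity_bytes: int, frame_bytes: int) -> None:
        if frame_bytes <= 0 or capacity_bytes < frame_bytes:
            raise ValueError("buffer must hold at least one frame")
        self.frame_bytes = frame_bytes
        self.capacity_frames = capacity_bytes // frame_bytes
        self._frames: deque = deque(maxlen=self.capacity_frames)
        self.frozen = False

    def push(self, frame: bytes) -> None:
        """Store one frame unless the buffer has been frozen by an abort."""
        if len(frame) != self.frame_bytes:
            raise ValueError("unexpected frame size")
        if not self.frozen:
            self._frames.append(frame)  # deque drops the oldest frame when full

    def on_abort(self) -> List[bytes]:
        """Freeze the buffer and return its frames, oldest first."""
        self.frozen = True
        return list(self._frames)

    def history_seconds(self, frame_rate_hz: float) -> float:
        """Time span covered by a full buffer at the given frame rate."""
        return self.capacity_frames / frame_rate_hz


if __name__ == "__main__":
    # Hypothetical numbers: 2 GB taken as 2 * 10**9 bytes, 8 KiB per frame.
    buf = AbortTriggeredRingBuffer(capacity_bytes=2 * 10**9, frame_bytes=8192)
    print(f"{buf.history_seconds(99_400):.2f} s of history at 99,400 fps")
```

With these hypothetical numbers the buffer holds roughly 2.5 seconds of one-frame-per-turn data before an abort; the real figure depends on the ROI size and pixel depth, which are not stated here.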

The Claxon CXP4 can also handle four 1-link cameras, two 2-link cameras, or a combination of these. Each link supports data acquisition at up to 12.5 Gb/s. The highly deterministic, low-latency frame grabber also provides a low-speed uplink on every link, accurate camera synchronization, and 13 W of Safe Power per link.
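
The link arithmetic above can be checked with a short enumeration. This sketch assumes each camera uses 1, 2 or 4 links (4 being the configuration used with the Mikrotron camera), that all four links are populated, and uses the 12.5 Gb/s nominal per-link rate, ignoring encoding and protocol overhead. The function names are illustrative and not part of any BitFlow software.

```python
from itertools import combinations_with_replacement
from typing import List, Tuple

LINK_RATE_GBPS = 12.5           # nominal CXP-12 rate per link
TOTAL_LINKS = 4                 # Claxon CXP4 quad-link board
CAMERA_LINK_WIDTHS = (1, 2, 4)  # assumed per-camera link counts


def link_configurations(total_links: int = TOTAL_LINKS) -> List[Tuple[int, ...]]:
    """List the ways to split all `total_links` links among cameras.
    Each tuple holds one link count per camera, in non-decreasing order."""
    configs = []
    for n_cameras in range(1, total_links + 1):
        for widths in combinations_with_replacement(CAMERA_LINK_WIDTHS, n_cameras):
            if sum(widths) == total_links:
                configs.append(widths)
    return configs


def camera_bandwidths_gbps(widths: Tuple[int, ...]) -> List[float]:
    """Nominal bandwidth available to each camera in a configuration."""
    return [w * LINK_RATE_GBPS for w in widths]


if __name__ == "__main__":
    for config in link_configurations():
        rates = camera_bandwidths_gbps(config)
        print(config, rates, "total:", sum(rates), "Gb/s")
```

Every fully populated configuration adds up to the same 50 Gb/s nominal total; what changes is how that bandwidth is divided between cameras.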

By reducing the camera’s Region of Interest (ROI), the X-ray monitoring system captured 99,400 frames per second, one frame per turn. The visible light system used an ROI twice the size of the X-ray system’s and ran at 49,700 frames per second, so it measured the beam profile once every two turns rather than every turn.
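
Because the X-ray frame rate equals the 99.4 kHz revolution frequency and the visible light rate is exactly half of it, each frame can be mapped to a turn number. The helper names below, and the assumption that frame 0 coincides with a known turn, are illustrative.

```python
REVOLUTION_HZ = 99_400  # SuperKEKB revolution frequency quoted in the article


def revolution_period_us() -> float:
    """Duration of one turn in microseconds."""
    return 1e6 / REVOLUTION_HZ


def turns_per_frame(frame_rate_hz: int) -> int:
    """Number of turns between consecutive frames; the frame rate must divide
    the revolution frequency exactly (true for 99,400 and 49,700 fps)."""
    turns, remainder = divmod(REVOLUTION_HZ, frame_rate_hz)
    if remainder != 0 or turns == 0:
        raise ValueError("frame rate is not an integer fraction of the revolution frequency")
    return turns


def frame_to_turn(frame_index: int, frame_rate_hz: int, first_turn: int = 0) -> int:
    """Turn sampled by a given frame, assuming frame 0 was taken on `first_turn`."""
    return first_turn + frame_index * turns_per_frame(frame_rate_hz)


if __name__ == "__main__":
    print(f"one turn = {revolution_period_us():.2f} us")
    for name, fps in (("X-ray", 99_400), ("visible light", 49_700)):
        print(name, "frame 10 -> turn", frame_to_turn(10, fps))
```

At roughly 10 µs per turn, sampling every other turn halves the time resolution of the visible light system relative to the X-ray system.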

DIFFERENTIATING BEAM PATTERNS

The frame grabber’s CXP-12 transmission speeds enabled SuperKEKB physicists to accurately distinguish between the various beam patterns that developed before SBL events occurred.
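
One way to tell which effect comes first, beam size growth or orbit oscillation, is to find the turn at which each per-turn signal leaves its quiet baseline. This is a hypothetical sketch, not the SuperKEKB analysis: the baseline length, the 5-sigma threshold and the function names are all assumptions.

```python
from statistics import mean, pstdev
from typing import Optional, Sequence

BASELINE_TURNS = 100  # assumed quiet period at the start of the record
N_SIGMA = 5.0         # assumed detection threshold


def onset_turn(signal: Sequence[float],
               baseline_turns: int = BASELINE_TURNS,
               n_sigma: float = N_SIGMA) -> Optional[int]:
    """First turn after the baseline where the signal deviates from the
    baseline mean by more than n_sigma baseline standard deviations."""
    if len(signal) <= baseline_turns:
        return None
    baseline = signal[:baseline_turns]
    mu = mean(baseline)
    limit = n_sigma * pstdev(baseline)
    for turn in range(baseline_turns, len(signal)):
        if abs(signal[turn] - mu) > limit:
            return turn
    return None


def which_first(beam_size: Sequence[float], orbit: Sequence[float]) -> str:
    """Report whether size growth or orbit oscillation starts first."""
    size_onset = onset_turn(beam_size)
    orbit_onset = onset_turn(orbit)
    if size_onset is None or orbit_onset is None:
        return "undetermined"
    if size_onset < orbit_onset:
        return "size"
    if orbit_onset < size_onset:
        return "orbit"
    return "simultaneous"
```

Applied to turn-by-turn size and orbit records from the two monitors, a result of "size" would correspond to the observation that size growth can begin before the orbit oscillation.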

By combining observations from the X-ray and visible light monitoring systems, the physicists arrived at a possible SBL scenario. They theorized that changes in the beam orbit may lead to a sudden increase in vacuum pressure in the damping section of SuperKEKB, with irradiation as a possible source. In this scenario, when the beam hits a vacuum component such as a beam collimator, the result is a sudden loss of beam current and an SBL event. However, this mechanism has not been fully confirmed. To explore other possibilities, SuperKEKB is developing more advanced X-ray beam size monitors that combine a silicon-strip sensor with a powerful ADC.

Visible light beam size monitor showing four cables running from a Mikrotron CXP-12 camera into a BitFlow Claxon CXP4 PCIe quad-link frame grabber to achieve 50 Gb/s data transfer rates (Image courtesy of SuperKEKB)

Claxon CXP4 frame grabber