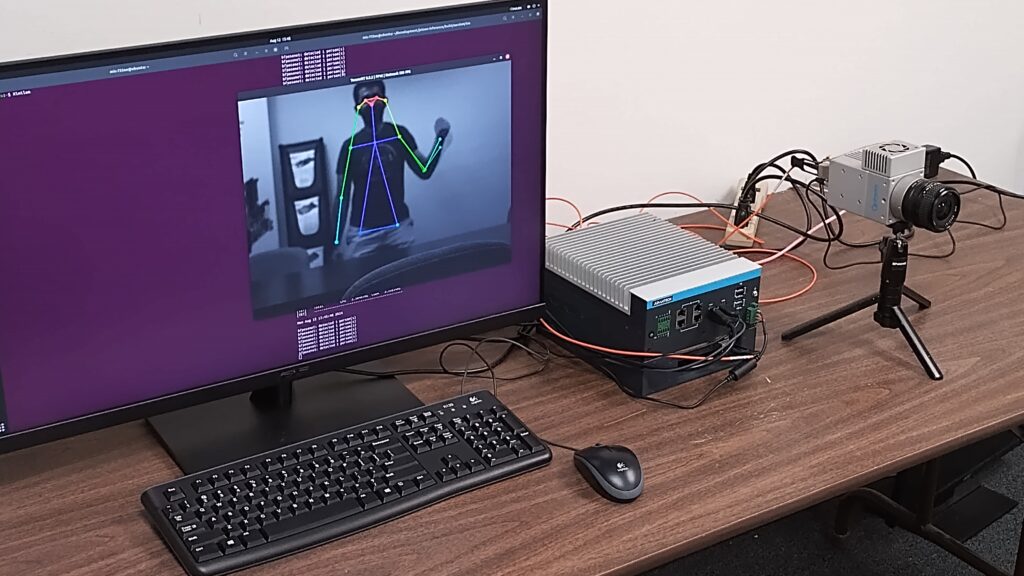

WOBURN, MA, JANUARY 8, 2025 — In collaboration with its parent company, Advantech, BitFlow announced today that it has successfully integrated its Claxon CoaXPress-over-Fiber (CoF) frame grabber with an Advantech AI inference edge computer and an Optronis Cyclone Fiber 5M camera to develop a real-time human pose estimation project accelerated by NVIDIA TensorRT deep learning.

One of the most advanced of its kind, the pose estimation system can provide low-latency analysis of movement for athletics, gaming, physical therapy, AR/VR, fall detection, and online coaching. Traditional approaches to pose estimation required multiple cameras and special suits with markers, making them impractical for most applications. AI-driven computer vision has advanced the field to the point where a single camera can now capture professional-grade pose estimates in real time.

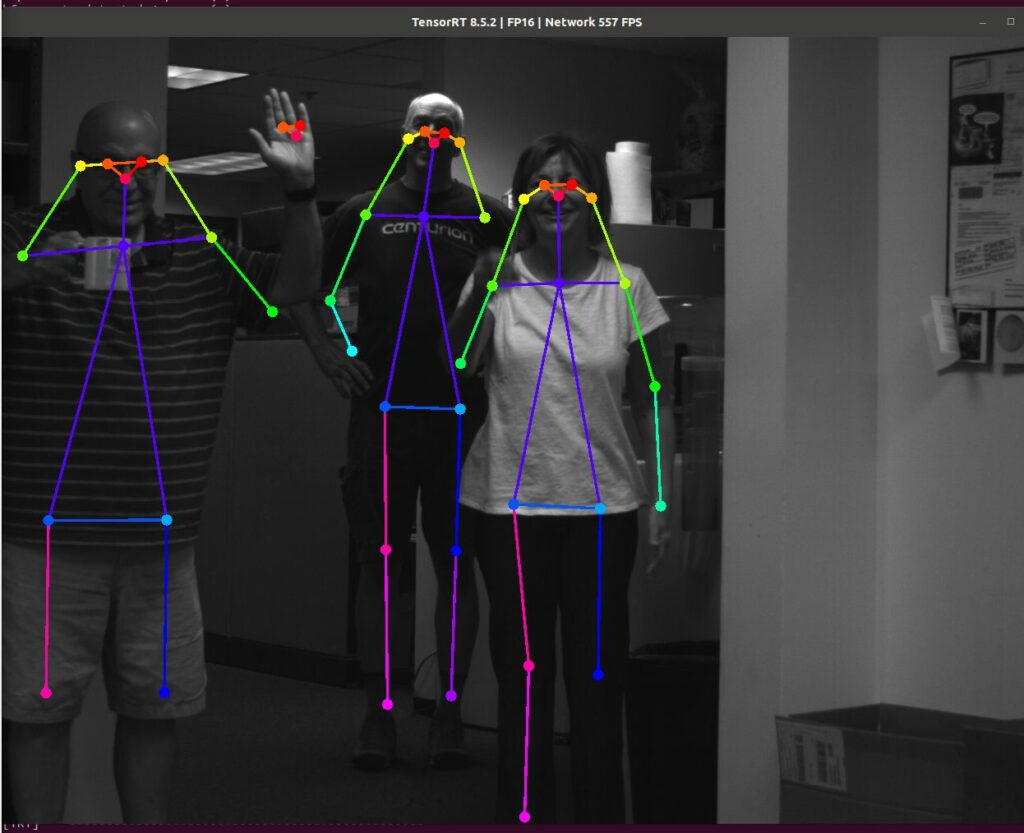

With a processing time of less than 2 milliseconds, the system is capable of acquiring 2560 x 1916 resolution images at 600 frames per second. Once images reach the BitFlow Claxon CoF frame grabber, the Claxon’s Direct Memory Access (DMA) engine transfers them directly into the Advantech computer’s GPU memory, reducing bottlenecks and freeing up the CPU to apply an NVIDIA pre-trained algorithm that searches each frame for people. If the algorithm locates a person, it calculates a rough skeleton location and overlays the displayed image with a “stick figure” representing the person’s bone structure.
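The overlay step can be illustrated with a minimal sketch. The announcement does not describe the output format of the NVIDIA model, so the joint names, the edge list, and the confidence threshold below are hypothetical and chosen only for illustration. The sketch turns a set of detected joints into the line segments that would be drawn as a stick figure.

```python
from typing import Dict, List, Tuple

# (x, y, confidence) for one detected joint; this layout is an assumption.
Keypoint = Tuple[float, float, float]
Segment = Tuple[Tuple[float, float], Tuple[float, float]]

# Hypothetical skeleton: pairs of joint names connected by a "bone".
SKELETON_EDGES: List[Tuple[str, str]] = [
    ("head", "neck"),
    ("neck", "left_shoulder"),
    ("neck", "right_shoulder"),
    ("left_shoulder", "left_elbow"),
    ("right_shoulder", "right_elbow"),
]


def skeleton_segments(
    keypoints: Dict[str, Keypoint], min_confidence: float = 0.5
) -> List[Segment]:
    """Return the line segments to draw for one detected person.

    A bone is drawn only when both of its joints were detected and
    both meet the (assumed) confidence threshold.
    """
    segments: List[Segment] = []
    for joint_a, joint_b in SKELETON_EDGES:
        if joint_a not in keypoints or joint_b not in keypoints:
            continue
        xa, ya, conf_a = keypoints[joint_a]
        xb, yb, conf_b = keypoints[joint_b]
        if conf_a >= min_confidence and conf_b >= min_confidence:
            segments.append(((xa, ya), (xb, yb)))
    return segments


# Made-up detections for a single person, in pixel coordinates.
example_keypoints: Dict[str, Keypoint] = {
    "head": (100.0, 50.0, 0.90),
    "neck": (100.0, 80.0, 0.95),
    "left_shoulder": (80.0, 90.0, 0.80),
    "right_shoulder": (120.0, 90.0, 0.30),  # below threshold, so skipped
}
example_segments = skeleton_segments(example_keypoints)
```

In a real pipeline each segment would be passed to a drawing routine that renders it on top of the displayed frame.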

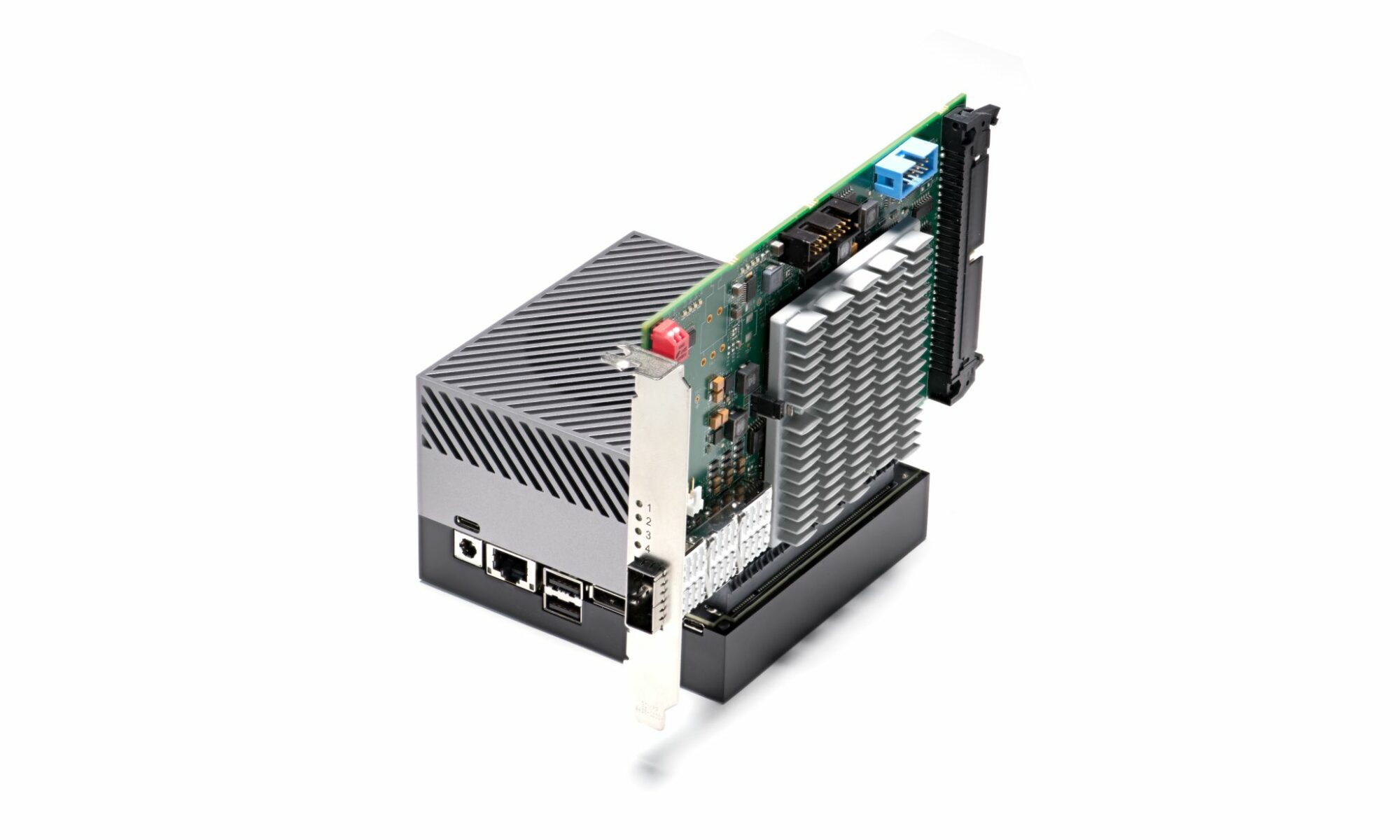

The Advantech MIC-733-AO AI edge computer is embedded with an NVIDIA Jetson AGX Orin that natively supports the NVIDIA TensorRT ecosystem of APIs for deep learning inference. An optional PCIe x8 iModule is available for the MIC-733-AO to accommodate BitFlow CoaXPress and Camera Link frame grabbers.

The system's high throughput demands required the use of the BitFlow Claxon CoF model. Designed to extend the benefits of CoaXPress over fiber optic cables, the Claxon CoF is a quad CXP-12 PCIe Gen 3 frame grabber that supports all QSFP+ compatible fiber cable assemblies. In addition to supporting high speeds, fiber cables are immune to EMI and are capable of running lengths well over a kilometer, far beyond Ethernet’s 100-meter limit.
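A rough back-of-the-envelope calculation shows why the throughput is demanding. The resolution, frame rate, and quad CXP-12 configuration come from the figures above. The 8 bits per pixel is an assumption, since the pixel format is not stated, and the link capacity is the nominal CXP-12 line rate of 12.5 Gbit/s per link, before any encoding or protocol overhead.

```python
# Figures stated in the announcement.
WIDTH_PX = 2560
HEIGHT_PX = 1916
FRAMES_PER_SECOND = 600
NUM_CXP_LINKS = 4  # "quad CXP-12"

# Assumptions, not stated in the announcement.
BITS_PER_PIXEL = 8            # assumed monochrome 8-bit pixel format
CXP12_LINE_RATE_GBPS = 12.5   # nominal CXP-12 line rate per link

pixels_per_frame = WIDTH_PX * HEIGHT_PX
pixels_per_second = pixels_per_frame * FRAMES_PER_SECOND

# Raw image payload, ignoring packet and encoding overhead.
payload_gbps = pixels_per_second * BITS_PER_PIXEL / 1e9
payload_gbytes_per_s = pixels_per_second * BITS_PER_PIXEL / 8 / 1e9

# Nominal aggregate line rate across all four links.
aggregate_line_rate_gbps = NUM_CXP_LINKS * CXP12_LINE_RATE_GBPS

# Time available per frame at the stated frame rate.
frame_period_ms = 1000.0 / FRAMES_PER_SECOND

print(f"Pixels per frame:     {pixels_per_frame:,}")
print(f"Image payload:        {payload_gbps:.2f} Gbit/s "
      f"({payload_gbytes_per_s:.2f} GB/s)")
print(f"Aggregate line rate:  {aggregate_line_rate_gbps:.1f} Gbit/s")
print(f"Frame period:         {frame_period_ms:.3f} ms")
```

Under these assumptions the camera produces roughly 23.5 Gbit/s of image data, which exceeds the nominal rate of a single CXP-12 link and is consistent with the choice of a four-link frame grabber.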